Director of AI at Tesla. Previously a research scientist at OpenAI and CS PhD student at Stanford. I like to train deep neural nets on large datasets 🧠🤖💥

Recent posts

I think this is mostly right.

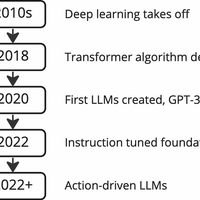

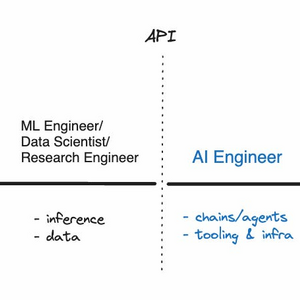

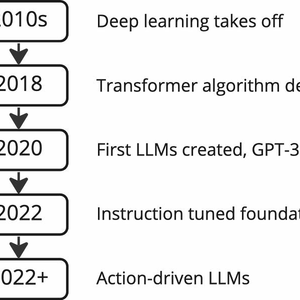

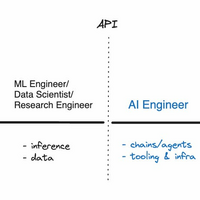

- LLMs created a whole new layer of abstraction and profession.

- I've so far called this role "Prompt Engineer" but agree it is misleading. It's not just prompting alone, there's a lot of glue code/infra around it. Maybe "AI Engineer" is ~usable, though it takes something a bit too specific and makes it a bit too broad.

- ML people train algorithms/networks, usually from scratch, usually at lower capability.

- LLM training is becoming sufficiently different from ML because of its systems-heavy workloads, and is also splitting off into a new kind of role, focused on very large scale training of transformers on supercomputers.

- In numbers, there are probably going to be significantly more AI Engineers than ML engineers / LLM engineers.

- One can be quite successful in this role without ever training anything.

- I don't fully follow the Software 1.0/2.0 framing. Software 3.0 (imo ~prompting LLMs) is amusing because prompts are human-designed "code", but in English, and interpreted by an LLM (itself now a Software 2.0 artifact). AI Engineers simultaneously program in all 3 paradigms. It's a bit 😵‍💫
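To make the three paradigms concrete, here is a toy sketch of the same task (Celsius to Fahrenheit) done three ways. Everything in it is an illustrative assumption: the function names, the prompt wording, and especially `fake_llm`, which is a hypothetical stand-in for a real model call so the snippet runs offline.

```python
import re
from typing import Callable, List, Tuple


# Software 1.0: the behavior is written out by hand as explicit code.
def celsius_to_fahrenheit(c: float) -> float:
    return c * 9.0 / 5.0 + 32.0


# Software 2.0: the behavior lives in parameters fitted to data.
def fit_line(points: List[Tuple[float, float]]) -> Tuple[float, float]:
    """Ordinary least squares for y = w * x + b."""
    n = len(points)
    mean_x = sum(x for x, _ in points) / n
    mean_y = sum(y for _, y in points) / n
    var_x = sum((x - mean_x) ** 2 for x, _ in points)
    cov_xy = sum((x - mean_x) * (y - mean_y) for x, y in points)
    w = cov_xy / var_x
    return w, mean_y - w * mean_x


# Software 3.0: the "program" is English text, interpreted by an LLM.
PROMPT = "Convert {c} degrees Celsius to Fahrenheit. Reply with only the number."


def run_prompt(llm: Callable[[str], str], c: float) -> float:
    # Glue code around the prompt: fill the template, call the model, parse text.
    return float(llm(PROMPT.format(c=c)))


def fake_llm(prompt: str) -> str:
    """Hypothetical stand-in for a real LLM; it just parses the number and computes."""
    match = re.search(r"-?\d+(?:\.\d+)?", prompt)
    if match is None:
        return "nan"
    return str(celsius_to_fahrenheit(float(match.group())))


if __name__ == "__main__":
    w, b = fit_line([(0.0, 32.0), (100.0, 212.0)])
    print(celsius_to_fahrenheit(37.0), w * 37.0 + b, run_prompt(fake_llm, 37.0))
```

In a real system the third path would call an actual model, and the surrounding template-and-parse glue is exactly the part that is not "just prompting".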

Good post. A lot of interest atm in wiring up LLMs to a wider compute infrastructure via text I/O (e.g. calculator, Python interpreter, Google search, scratchpads, databases, ...). The LLM becomes the "cognitive engine" orchestrating resources, its thought stack trace in raw text.
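A minimal sketch of what that wiring can look like, under loud assumptions: the `CALL <tool>: <input>` / `RESULT:` / `FINAL:` line protocol is invented here for illustration, and `scripted_llm` is a hypothetical stand-in for a real model so the loop runs offline. The only tool is a small arithmetic calculator; the transcript list is the raw-text "stack trace".

```python
import ast
import operator
from typing import Callable, Dict, List

# Arithmetic operators the toy calculator accepts.
_OPS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
}


def calculator(expr: str) -> str:
    """Evaluate +, -, *, / over numeric literals without using eval()."""
    def ev(node: ast.AST) -> float:
        if isinstance(node, ast.Constant) and isinstance(node.value, (int, float)):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in _OPS:
            return _OPS[type(node.op)](ev(node.left), ev(node.right))
        raise ValueError("unsupported expression")
    return str(ev(ast.parse(expr, mode="eval").body))


TOOLS: Dict[str, Callable[[str], str]] = {"calculator": calculator}


def run_agent(llm: Callable[[str], str], question: str, max_steps: int = 5) -> List[str]:
    """Loop: show the LLM the transcript so far, run any tool it asks for, append the result."""
    transcript = [f"QUESTION: {question}"]
    for _ in range(max_steps):
        reply = llm("\n".join(transcript)).strip()
        transcript.append(reply)
        if reply.startswith("FINAL:"):
            break
        if reply.startswith("CALL "):
            name, _, arg = reply[len("CALL "):].partition(":")
            tool = TOOLS.get(name.strip())
            result = tool(arg.strip()) if tool else f"unknown tool {name.strip()!r}"
            transcript.append(f"RESULT: {result}")
    return transcript


def scripted_llm(transcript: str) -> str:
    """Hypothetical stand-in for a real LLM: call the calculator once, then answer."""
    last = transcript.splitlines()[-1]
    if last.startswith("RESULT:"):
        return "FINAL: " + last[len("RESULT:"):].strip()
    return "CALL calculator: 12 * (3 + 4)"


if __name__ == "__main__":
    print("\n".join(run_agent(scripted_llm, "What is 12 * (3 + 4)?")))
```

Swapping `scripted_llm` for a real model call and adding more entries to `TOOLS` (search, a database, a scratchpad) keeps the same shape: everything the model sees and does passes through plain text.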