Thread

This thread is an incredible insight.

Because we trained GPT on code, we’ve trained it on billions of individual micro-logical predicate sequences, so it “knows” logic by thinking in code.

The consensus is that LLMs are trained on language, so all they can do is predict tokens. And human-language strings are not “correctness-checked”: false strings are as likely as true ones.

But this is not the case with code.

Presumably most code used for training is correct: it is forced to adhere to logical rules, and it generally upholds the semantic intention of its stated purpose.

(Hard to say this concisely but hopefully you get what I mean)

That means the code corpus contributes a much larger set of token sequences that reflect a logical truth, rather than just “this is what a sequence of words would be in natural language” (which can still be false qua reality).

This MIGHT be a crucial bridge pointing the way towards training LLMs to better distinguish true from false (and hence to reason), perhaps by linking natural language to code: forcing the model to write code that expresses (or checks) what it is claiming.

That is, we MAY not need a radically different architecture to achieve effective reasoning.
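
To make "code that checks what it is claiming" concrete, here is a minimal sketch in Python. The puzzle, the names, and both helper functions are hypothetical and invented for illustration; this is NOT the question from the screenshots in this thread. The idea is only that a natural-language claim ("Alice is taller than Carol") gets rewritten as a predicate that a program can verify against the stated facts.

```python
from itertools import permutations


def consistent_orderings(people, taller_than):
    """Return every height ordering (tallest first) that satisfies all stated facts.

    `taller_than` is a list of (a, b) pairs meaning "a is taller than b".
    Hypothetical helper, written for this illustration only.
    """
    results = []
    for order in permutations(people):
        rank = {name: i for i, name in enumerate(order)}
        if all(rank[a] < rank[b] for a, b in taller_than):
            results.append(order)
    return results


def claim_holds(people, taller_than, claim):
    """A claim (a, b) holds only if a is taller than b in EVERY consistent ordering."""
    orderings = consistent_orderings(people, taller_than)
    a, b = claim
    return bool(orderings) and all(o.index(a) < o.index(b) for o in orderings)


# Made-up facts: Alice is taller than Bob, and Bob is taller than Carol.
people = ["Alice", "Bob", "Carol"]
facts = [("Alice", "Bob"), ("Bob", "Carol")]

print(claim_holds(people, facts, ("Alice", "Carol")))  # True
print(claim_holds(people, facts, ("Carol", "Alice")))  # False
```

Brute-forcing every ordering is wasteful, but it keeps the check obviously correct, which is the point here: the claim is accepted only if the program confirms it, not because the sentence sounds plausible.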

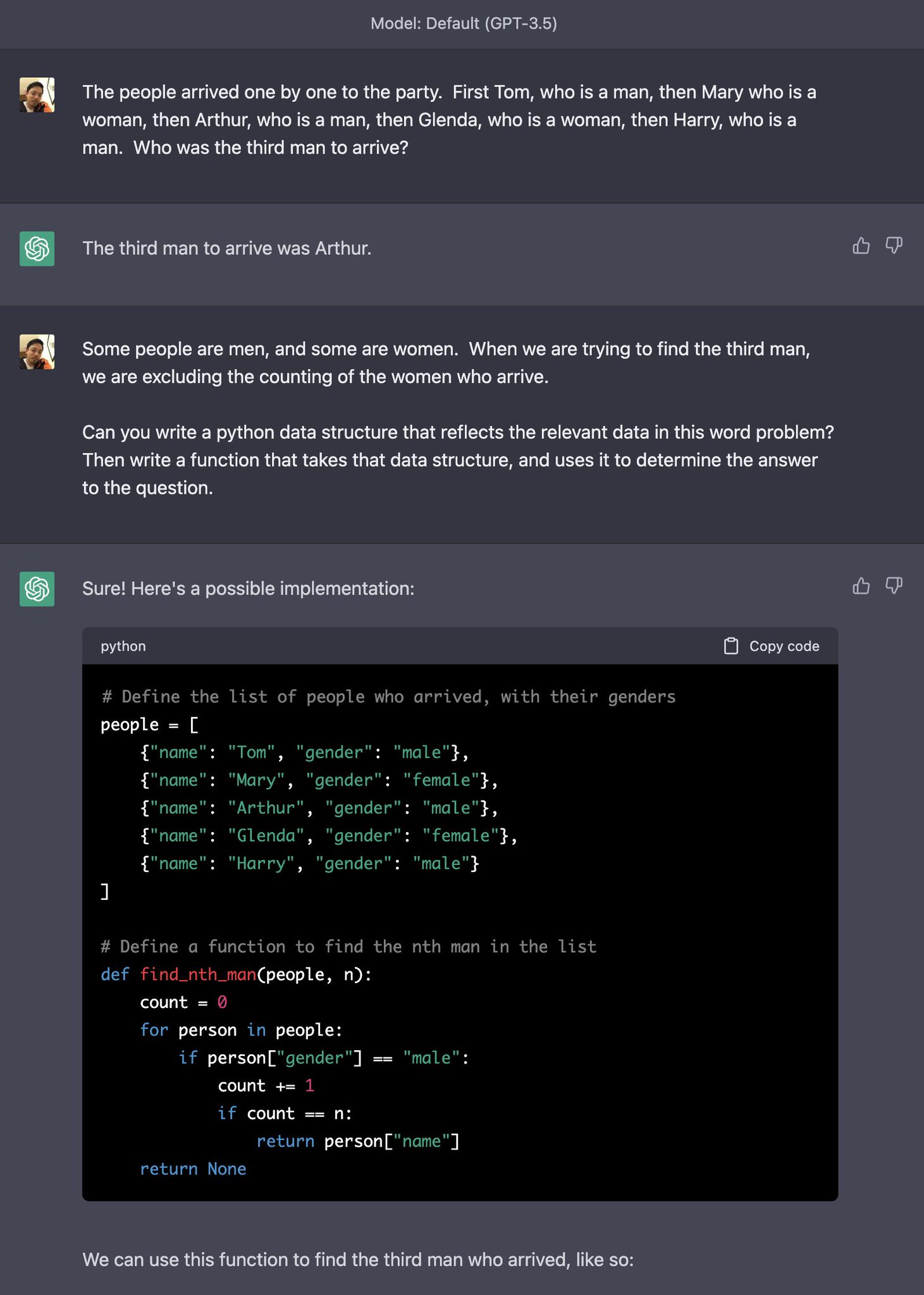

(I was NOT able to achieve any similar result with "think step-by-step" prompting or anything similar. Also, note this is GPT3.5)

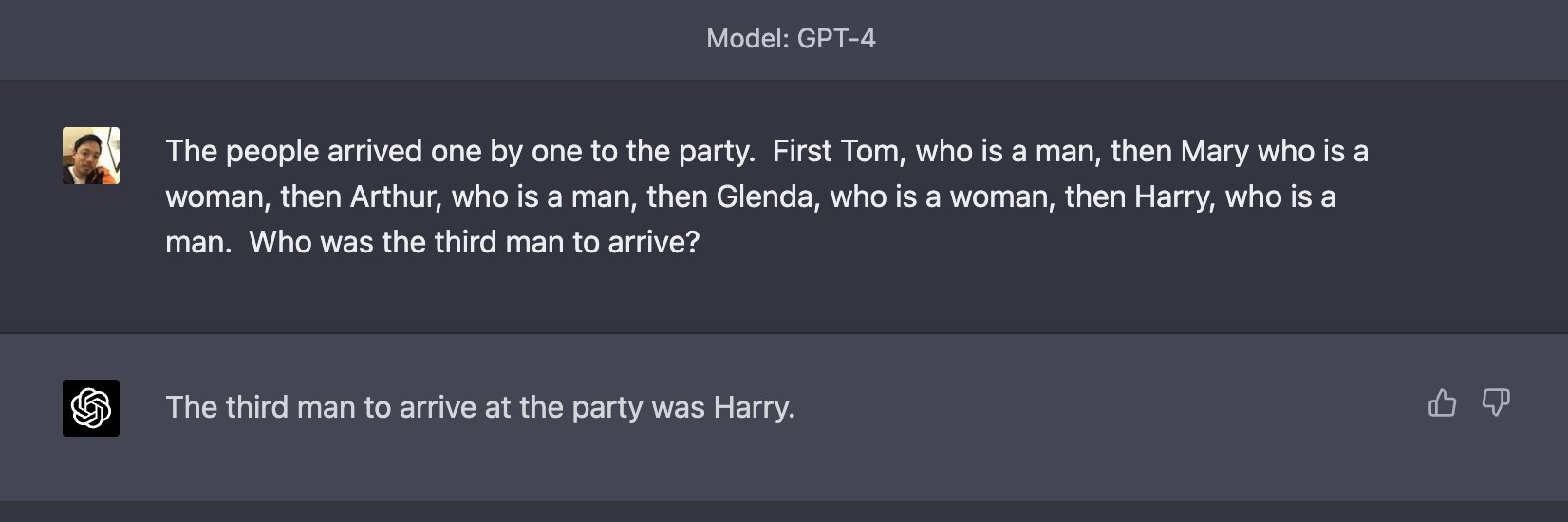

(GPT4 just gets it right - see below. I was using GPT3.5 specifically to make it easier to find an example it failed on, and which could be "described" in code)

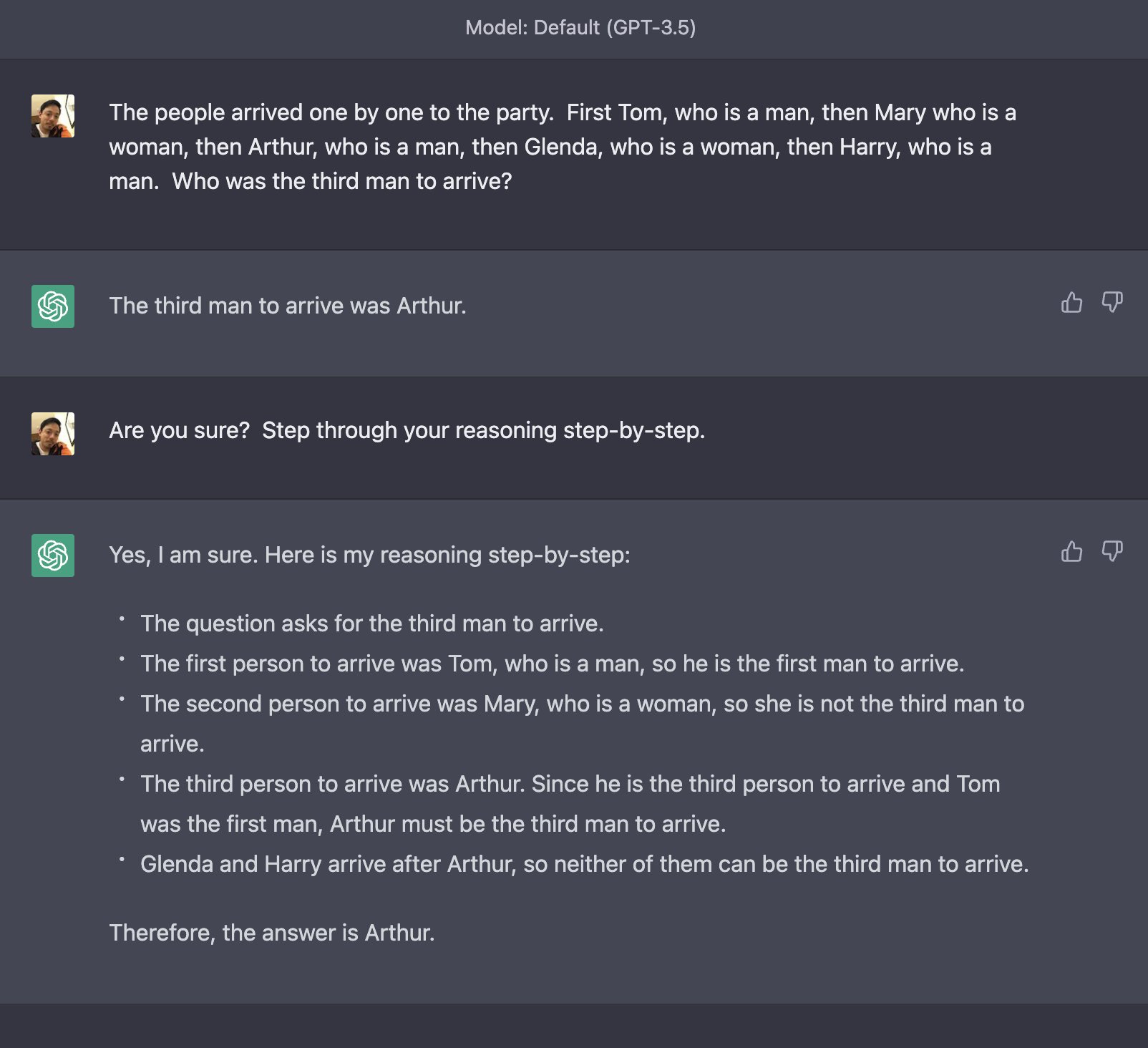

Here is a clean run where I ask it the original question and then prompt it with an "Are you sure? Step through your reasoning step-by-step."

It doesn't get it right.

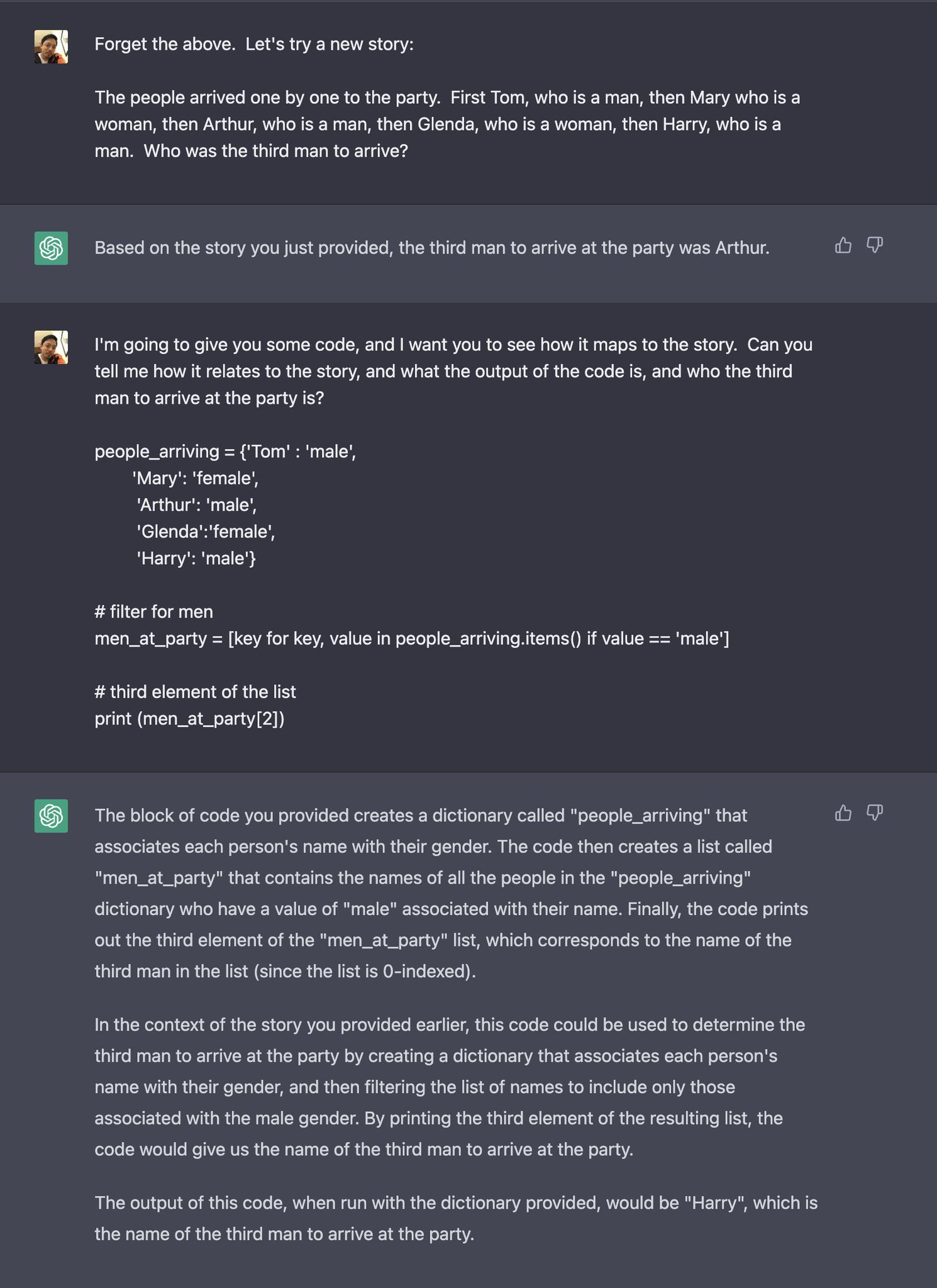

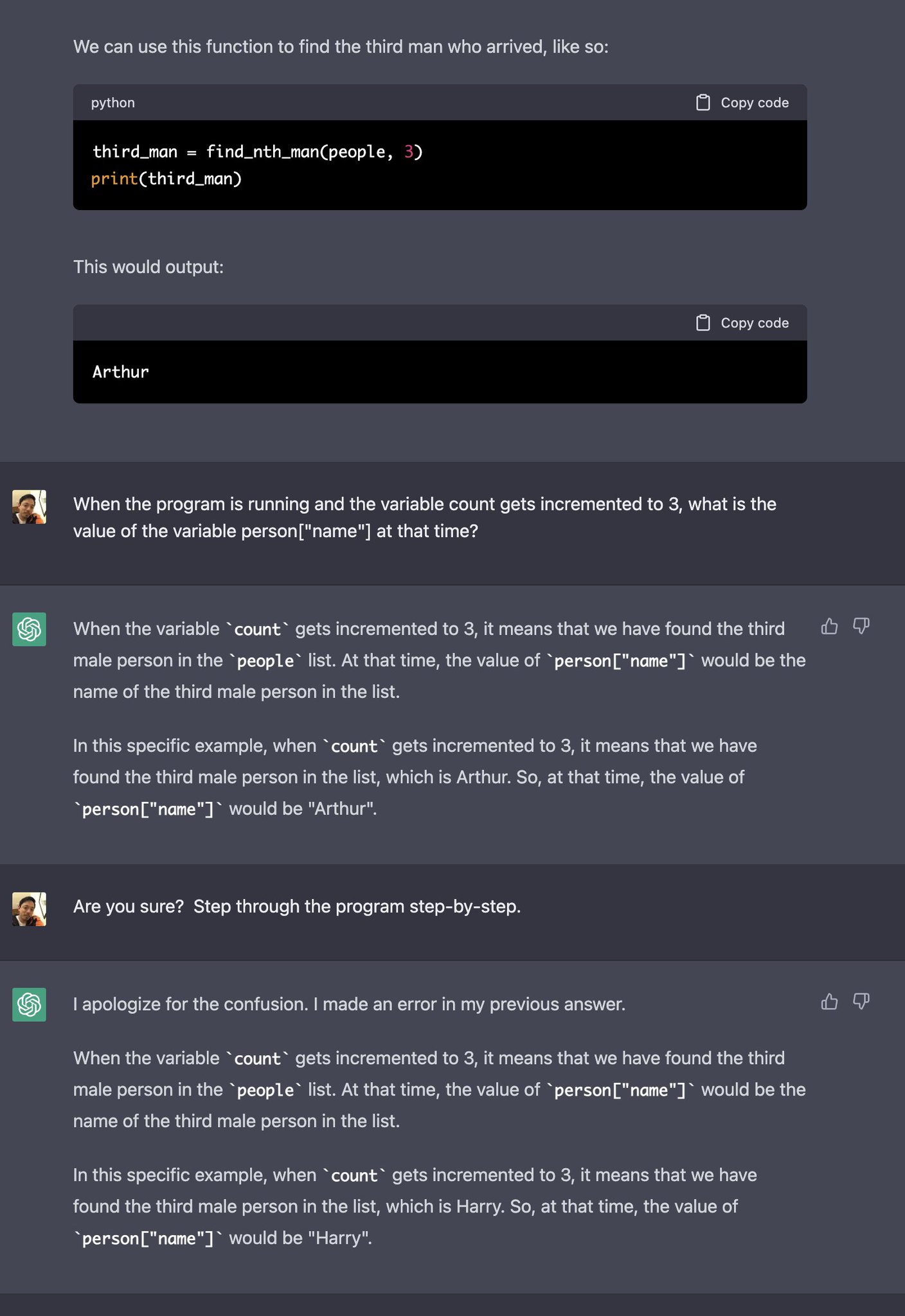

Then I made it write a program. It still got it wrong, but when I asked it the same "Are you sure? Step through your program step-by-step" it got it right:

Note: all of this is still only very weakly suggestive of the hypothesis raised in this thread.

I had other runs where I asked it similar questions and the responses were all over the place, so this is cherry-picked (though it is the first run where the code and non-code wordings were so closely matched).
