Thread

You have seen numerous computer vision applications 🏙.

Most of them involve CNNs in one form or another 🔥.

Here's how CNNs work in 7 steps:

--A Thread--

🧵

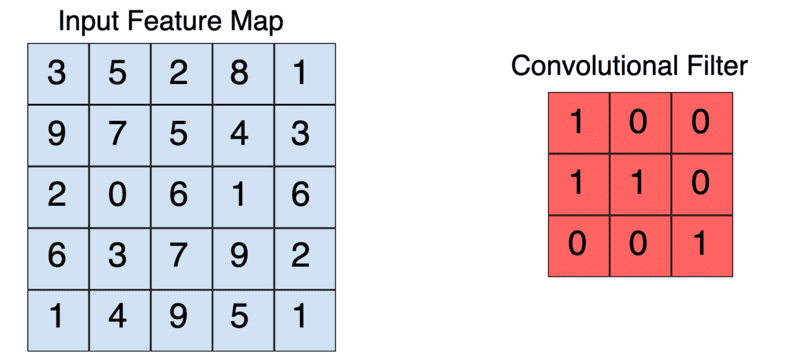

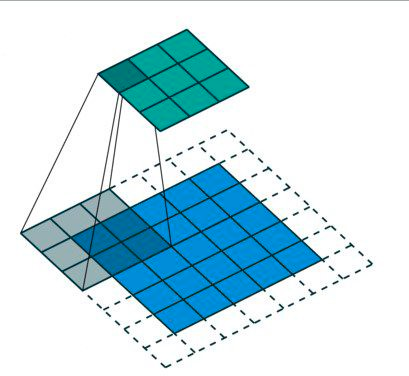

Step 1: Convolution

Convolution extracts features from an image and, when no padding is used, also reduces its size, which cuts processing time and the amount of compute required.

The convolution operation results in a feature map.

Convolution works by passing a filter (also called a kernel) over the input image.

Images: Google Developers

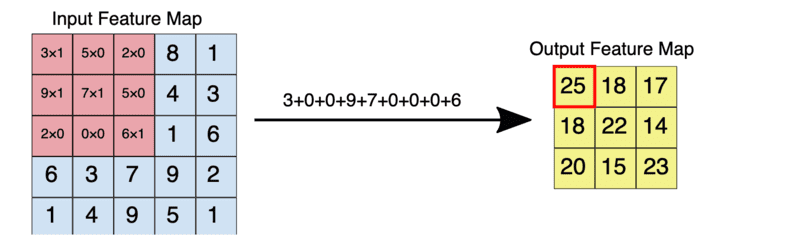

Given the above input image and filter, the convolution operation looks like this:

• 3×1 + 5×0 + 2×0 + 9×1 + 7×1 + 5×0 + 2×0 + 0×0 + 6×1 = 3 + 0 + 0 + 9 + 7 + 0 + 0 + 0 + 6 = 25

• Slide the kernel across the entire input image, repeating this computation at each position, to obtain the remaining values.
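
The arithmetic above can be checked in a few lines of NumPy. This is a minimal sketch: the patch and kernel values are read off the sum above and assumed to be listed row by row, since the image itself is not reproduced here.

```python
import numpy as np

# Values taken from the worked sum above, assumed to be in row-major order.
patch = np.array([[3, 5, 2],
                  [9, 7, 5],
                  [2, 0, 6]])
kernel = np.array([[1, 0, 0],
                   [1, 1, 0],
                   [0, 0, 1]])

# One entry of the feature map: multiply element-wise, then add everything up.
value = int(np.sum(patch * kernel))
print(value)  # 25
```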

The kernel moves over the input image in steps; the size of each step is known as the stride.

The stride is defined while designing the network.
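
To see what the stride does to the output size, here is a rough sketch of sliding a kernel with a configurable stride. The function name `conv2d_strided`, the 5x5 input, and the all-ones kernel are made up for illustration. Like most deep learning libraries, it does not flip the kernel.

```python
import numpy as np

def conv2d_strided(image, kernel, stride=1):
    """Slide `kernel` over `image`, moving `stride` pixels per step (no padding)."""
    kh, kw = kernel.shape
    out_h = (image.shape[0] - kh) // stride + 1
    out_w = (image.shape[1] - kw) // stride + 1
    out = np.zeros((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            top, left = i * stride, j * stride
            window = image[top:top + kh, left:left + kw]
            out[i, j] = np.sum(window * kernel)
    return out

image = np.arange(25).reshape(5, 5)  # made-up 5x5 input
kernel = np.ones((3, 3))             # made-up 3x3 kernel

print(conv2d_strided(image, kernel, stride=1).shape)  # (3, 3)
print(conv2d_strided(image, kernel, stride=2).shape)  # (2, 2)
```

A larger stride means fewer kernel positions, so the feature map gets smaller.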

Step 2: Padding

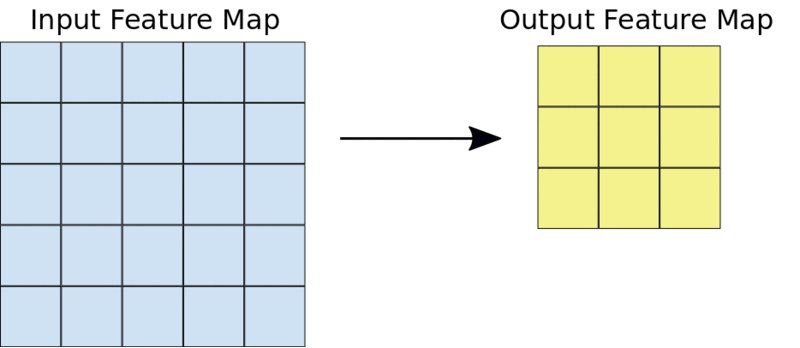

Padding is applied to keep the feature map the same size as the input image.

Padding increases the size of the input image by adding zeros around its border so that, when the kernel is applied, the output has the same size as the original input.
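
A small sketch of zero padding with `np.pad`, assuming a made-up 5x5 input, a 3x3 kernel, and a stride of 1. For an odd kernel size k at stride 1, adding (k - 1) // 2 zeros on each side keeps the output the same size as the input.

```python
import numpy as np

image = np.arange(1, 26).reshape(5, 5)  # made-up 5x5 input
kernel_size = 3                          # assumed odd, with stride 1

# Number of zeros to add on each side so the output matches the input size.
pad = (kernel_size - 1) // 2
padded = np.pad(image, pad_width=pad, mode="constant", constant_values=0)

# Feature map side length without and with padding.
out_no_pad = image.shape[0] - kernel_size + 1
out_padded = padded.shape[0] - kernel_size + 1

print(padded.shape)  # (7, 7)
print(out_no_pad)    # 3
print(out_padded)    # 5
```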

Step 3: Apply ReLU

The Rectified Linear Unit (ReLU) is applied to the output of the convolution operation to introduce non-linearity.

Applying ReLU sets all values below zero to zero, while all other values are returned unchanged.
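
In code, ReLU is a one-liner. A minimal sketch with a made-up 2x2 feature map:

```python
import numpy as np

def relu(x):
    """Replace negative values with zero and leave the rest unchanged."""
    return np.maximum(0, x)

feature_map = np.array([[-3, 5],
                        [0, -1]])  # made-up values
print(relu(feature_map).tolist())  # [[0, 5], [0, 0]]
```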

Step 4: Pooling

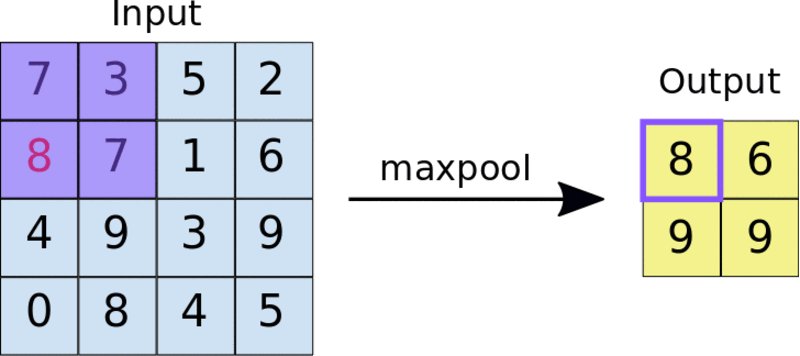

Pooling reduces the size of the feature map further by sliding another filter over it.

In max pooling, the filter slides over the feature map, picking the largest value in each window.

Pooling results in a pooled feature map.
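
Here is a rough sketch of max pooling, assuming a 2x2 window that moves 2 pixels at a time (a common choice, not something stated in this thread). The function name `max_pool2d` and the 4x4 feature map are made up.

```python
import numpy as np

def max_pool2d(feature_map, size=2, stride=2):
    """Keep the largest value in each size x size window."""
    out_h = (feature_map.shape[0] - size) // stride + 1
    out_w = (feature_map.shape[1] - size) // stride + 1
    out = np.zeros((out_h, out_w), dtype=feature_map.dtype)
    for i in range(out_h):
        for j in range(out_w):
            top, left = i * stride, j * stride
            window = feature_map[top:top + size, left:left + size]
            out[i, j] = window.max()
    return out

feature_map = np.array([[1, 3, 2, 4],
                        [5, 6, 1, 0],
                        [7, 2, 9, 8],
                        [3, 4, 5, 6]])  # made-up values

print(max_pool2d(feature_map).tolist())  # [[6, 4], [7, 9]]
```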

Step 5: Dropout regularization

It is usually good practice to randomly drop some units, and with them their connections, during training to prevent overfitting.

Dropout regularization forces the network to learn the essential features needed to identify an image rather than memorize the training data.
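
A minimal sketch of dropout, using the common "inverted dropout" variant, which is an assumption here since the thread does not name one. Units are zeroed at random during training and the survivors are scaled up so the expected activation stays the same. The function name and the 0.5 rate are illustrative.

```python
import numpy as np

def dropout(activations, rate, rng, training=True):
    """Zero out roughly `rate` of the units during training (inverted dropout)."""
    if not training or rate == 0.0:
        return activations  # dropout is switched off at prediction time
    keep_prob = 1.0 - rate
    mask = rng.random(activations.shape) < keep_prob
    return activations * mask / keep_prob

rng = np.random.default_rng(0)   # seeded only to make the sketch repeatable
activations = np.ones((4, 4))    # made-up activations

dropped = dropout(activations, rate=0.5, rng=rng)
print(dropped)  # each entry is either 0.0 (dropped) or 2.0 (kept and rescaled)
```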

Step 6: Flattening

Flattening converts the pooled feature map into a single column that can be passed to the fully connected layer.

Flattening results in a flattened feature map.
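
In NumPy, flattening is just a reshape. A quick sketch with a made-up 2x2 pooled feature map:

```python
import numpy as np

pooled = np.array([[6, 4],
                   [7, 9]])  # made-up pooled feature map

# -1 lets NumPy work out the number of rows; 1 gives a single column.
column = pooled.reshape(-1, 1)

print(column.shape)             # (4, 1)
print(column.ravel().tolist())  # [6, 4, 7, 9]
```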

Step 7: Full connection

The last step is to pass the flattened feature map to some fully connected layers, with the last layer being responsible for generating the predictions.

The last layer's activation function depends on the desired output, for example softmax for multi-class classification or sigmoid for binary classification.
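
As a rough sketch of this last step, here is one fully connected layer followed by softmax, assuming a 3-class problem. The weights are random placeholders rather than trained values, and the names `dense` and `softmax` are just for illustration.

```python
import numpy as np

def dense(x, weights, bias):
    """Fully connected layer: weighted sum of every input plus a bias."""
    return weights @ x + bias

def softmax(z):
    """Turn raw scores into probabilities that sum to 1."""
    shifted = z - np.max(z)  # subtract the max for numerical stability
    exp = np.exp(shifted)
    return exp / np.sum(exp)

rng = np.random.default_rng(0)
flattened = np.array([6.0, 4.0, 7.0, 9.0])  # made-up flattened feature map
weights = rng.normal(size=(3, 4))           # placeholder weights: 3 classes, 4 inputs
bias = np.zeros(3)

logits = dense(flattened, weights, bias)
probs = softmax(logits)
predicted_class = int(np.argmax(probs))

print(probs)            # three probabilities, one per class
print(predicted_class)  # index of the most likely class
```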

That's it for today.

Follow @themwiti for more content on machine learning and deep learning.

Retweet the first tweet for reach.
